California is taking action as AI chatbots become increasingly common and increasingly convincing. Starting this year, two new laws aim to make AI interactions safer and more transparent for users, with a special focus on protecting children.

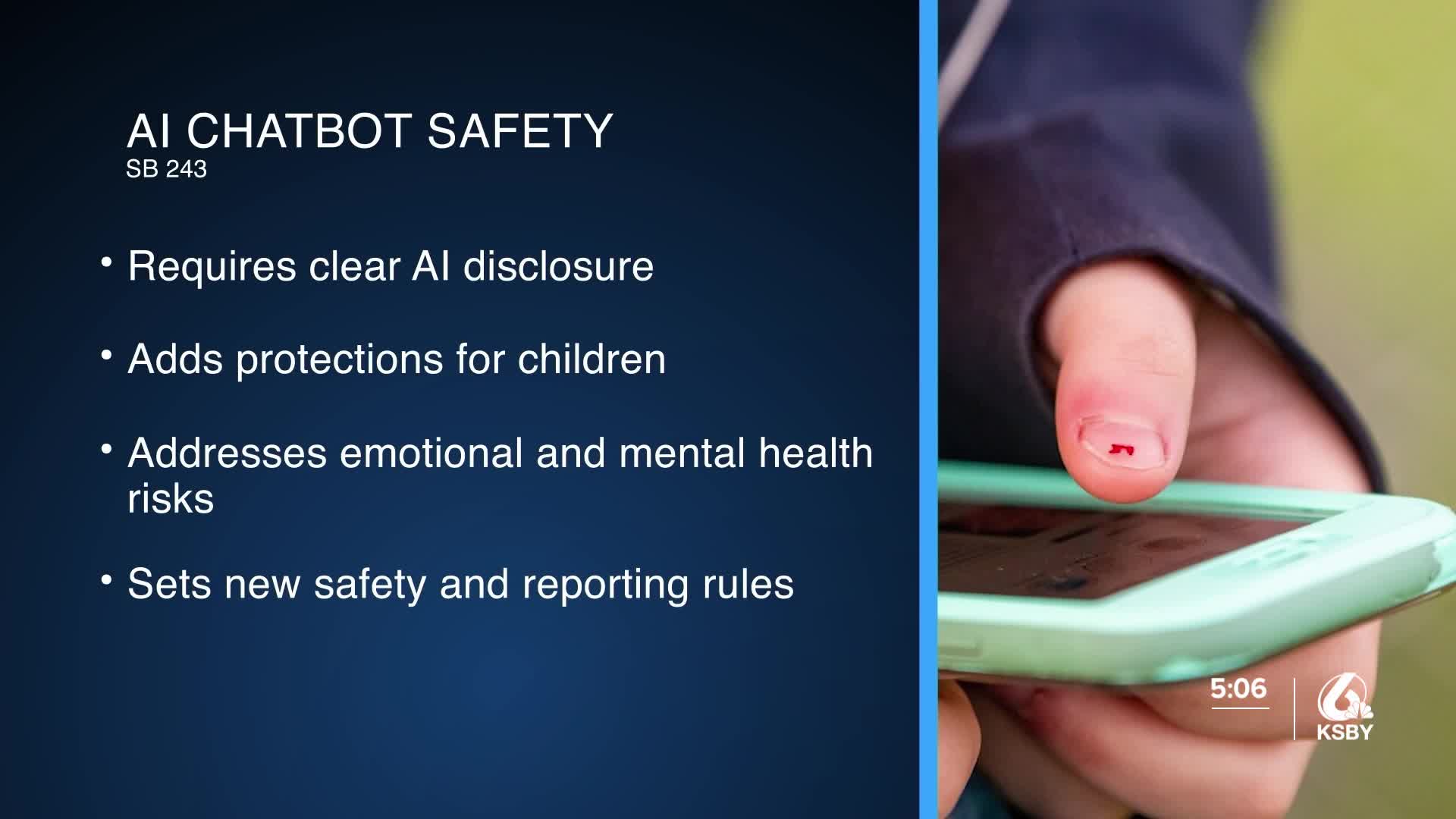

One of the new laws, SB 243, requires companies to clearly disclose when a user is interacting with an AI system rather than a real person. Lawmakers say children can form emotional connections with chatbots, sometimes believing there is a real relationship on the other end.

The law also adds stronger safeguards for kids, including warnings designed to prevent harmful or misleading interactions and address mental health concerns. Some platforms will also need to follow new reporting and safety standards. State leaders say the goal is simple: make sure users know who, or what, they are talking to.

The second law, AB 489, bans AI chatbots from posing as licensed professionals, including doctors, nurses, or therapists. The measure is designed to prevent users from receiving misleading or dangerous advice.

Under the law, AI systems can no longer present themselves as medical or mental health professionals. California’s health licensing boards, such as the Medical Board and the Board of Registered Nursing, can take action against companies that misrepresent AI as a qualified expert. Chatbots have already been used in ways that seem therapeutic but lack legal or medical backing.